Week of June 7th

What I did

- PyTorch introduction and learning to use TensorBoard

- Mounting Google Drive data in Colab so your results don’t get thrown out after 24hrs

- Implemented TensorBoard in Colab

- PyTorch Lightning

- Implemented a neural network from scratch with PyTorch (more useful for understanding than the Jupyter notebook crap where you have complicated data loaders to deal with)

- TUM lecture on hyperparameter tuning, part 2

- Switched from PyLint to flake8 because PyLint wasn’t playing nice with PyTorch

What I learned

- To mount Google Drive in Colab:

from google.colab import drive

drive.mount("/content/gdrive", force_remount=True)

Then add the Drive directory that holds the .py scripts you want to import to the Python path. Also install any packages that Colab doesn’t ship by default.

import sys

sys.path.append('/content/gdrive/My Drive/Colab Notebooks/')

!pip install pytorch_lightning

Then make sure GPU acceleration is enabled in Colab and run the following. Note that this check only detects a CUDA GPU, so on a TPU runtime it will report cpu.

import torch

# Use the GPU when Colab provides one, otherwise fall back to the CPU.
if torch.cuda.is_available():
    device = torch.device("cuda")
else:
    device = torch.device("cpu")

print(device)
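Picking a device is only half of it: the model and every batch also have to be moved there. Below is a minimal sketch of that step, assuming a made-up toy `nn.Linear` model and random input (neither comes from my actual scripts).

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical toy model and batch, only for illustration.
model = nn.Linear(4, 2).to(device)    # moves the model parameters to the device
batch = torch.randn(8, 4).to(device)  # inputs must live on the same device

with torch.no_grad():
    output = model(batch)

print(output.shape, output.device)
```

If the model and the data end up on different devices, PyTorch raises a runtime error, so it is worth printing `output.device` once to confirm.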

- Choosing a loss function. L2 is more computationally efficient than L1, but it is more sensitive to outliers (i.e. a few large errors getting squared can dominate the loss and screw up your NN training). Cross-entropy loss is the loss built on top of softmax. For softmax, you take a training example, dot it with the theta params of each class to get a score per class, exponentiate the scores, and normalize by dividing by the sum of the exponentiated scores over all classes. The cross-entropy loss is just the negative log of the resulting probability for the true class.

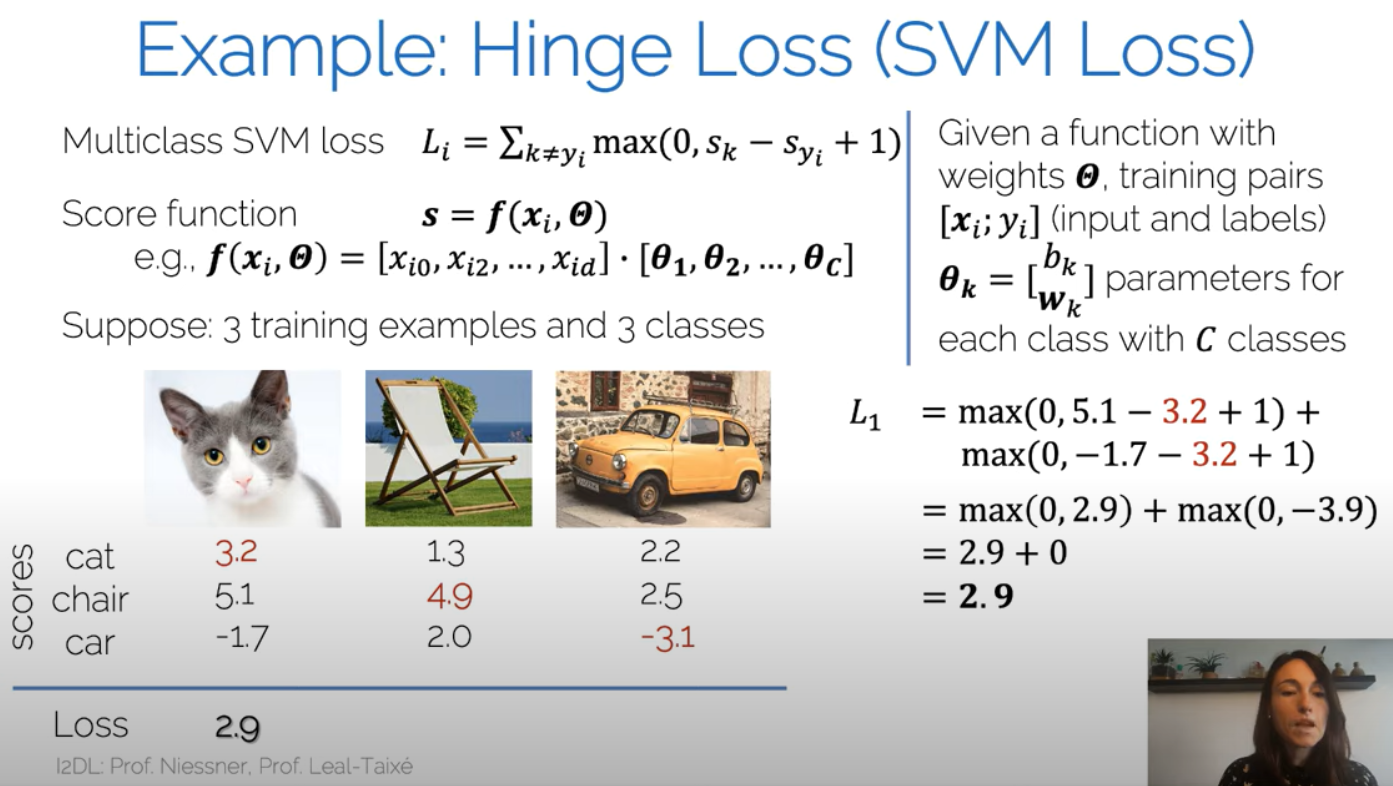
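The softmax-then-negative-log recipe can be sketched in plain Python to expose the arithmetic. This is only an illustration: the `softmax` and `cross_entropy` helpers and the scores are made up, and in practice PyTorch's built-in loss would be used instead.

```python
import math

def softmax(scores):
    # Subtract the max score for numerical stability; the result is unchanged.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(scores, true_class):
    # Negative log of the softmax probability assigned to the true class.
    return -math.log(softmax(scores)[true_class])

scores = [2.0, 1.0, 0.1]  # made-up class scores for one training example
probs = softmax(scores)
loss_if_class_0 = cross_entropy(scores, true_class=0)
loss_if_class_2 = cross_entropy(scores, true_class=2)

print(probs)
print(loss_if_class_0, loss_if_class_2)
```

The loss is small when the true class already has the highest score and grows as the true class gets less probability.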

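i indexes the training example. The input that gets dotted with theta can be the raw features of the example or, in a deeper network, the values of the nodes at the last hidden layer.

Hinge loss is more interesting. It doesn’t penalize a correct prediction as long as it is confident enough, i.e. the score of the true class beats every other class score by at least a margin of 1.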

\[L_i = \sum_{k \neq \text{true}} \max(0, S_k - S_{\text{true}} + 1)\]

It’s easier to just look at the lecture slide.
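A small sketch of the hinge loss for a single example, assuming the sum runs over every class except the true one and the margin is 1 as in the formula. The `hinge_loss` helper and the scores are made up for illustration.

```python
def hinge_loss(scores, true_class, margin=1.0):
    # Sum over the wrong classes of max(0, S_k - S_true + margin).
    true_score = scores[true_class]
    return sum(
        max(0.0, s - true_score + margin)
        for k, s in enumerate(scores)
        if k != true_class
    )

confident = hinge_loss([5.0, 1.0, 2.0], true_class=0)  # correct with a wide margin
unsure = hinge_loss([2.5, 2.0, 0.0], true_class=0)     # correct, but margin under 1
wrong = hinge_loss([1.0, 3.0, 0.0], true_class=0)      # another class scores higher

print(confident, unsure, wrong)  # 0.0 0.5 3.0
```

The confident correct prediction costs nothing, while a correct prediction inside the margin still gets a small penalty.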

What I will do next

- I left off on Saturday night with Colab’s GPU being outperformed by my laptop’s CPU. I need to figure out what’s up.